Understanding AI's environmental footprint


When you ask ChatGPT to summarize an email, create a recipe, or suggest summer vacation spots, it does a ton of processing behind the scenes. This is a form of machine learning called generative AI, which requires a lot of energy for training and for producing answers to your queries.

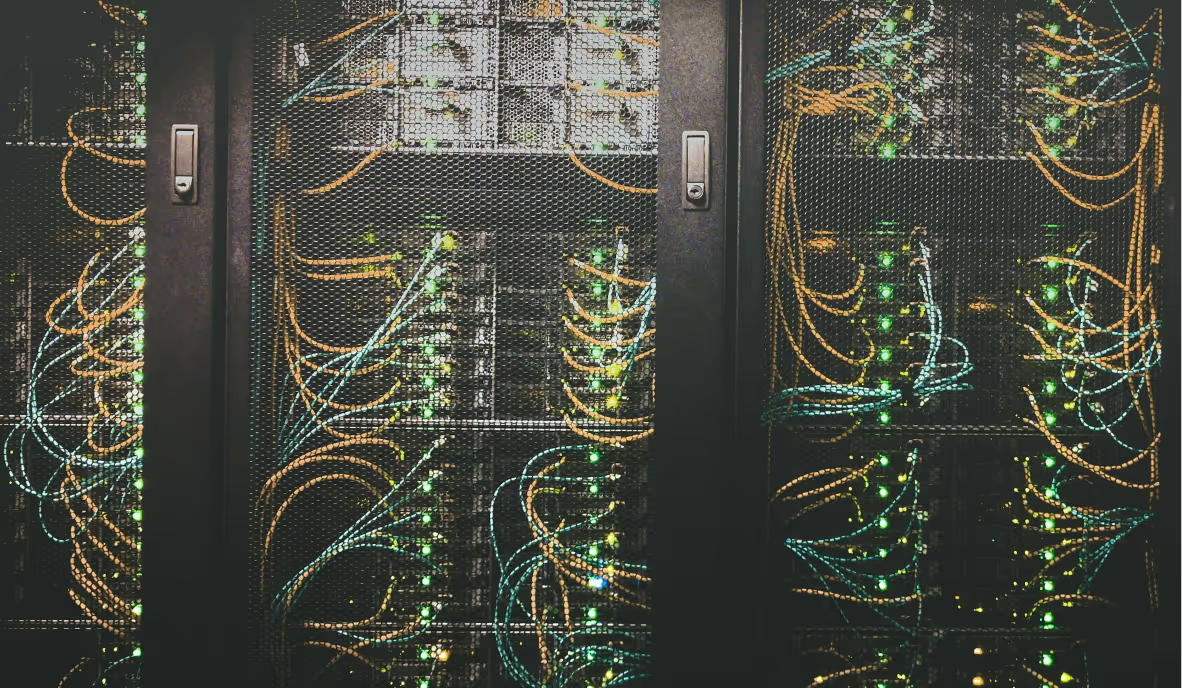

Most of these generative AI models run in data centers, many of which draw power from grids that still rely heavily on fossil fuels. That means AI models contribute to rising carbon emissions and the climate crisis.

Calculating AI energy emissions

In January 2024, the International Energy Agency (IEA) included data centers, cryptocurrency, and artificial intelligence in its global electricity forecast for the first time. Together, these sectors accounted for almost 2% of the world's electricity demand in 2022, and the IEA predicts that their demand could double by 2026. That would be roughly equal to the total electricity consumption of Japan.

But how much of this demand would come from AI models alone?

According to the IEA, a single Google search uses 0.3 watt-hours of electricity, while a ChatGPT request uses 2.9 watt-hours. If ChatGPT-style queries replaced all 9 billion daily searches, they would require almost 10 terawatt-hours of additional electricity a year. That’s roughly the annual consumption of 1.5 million European Union residents.
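To see where a figure like this comes from, here is a back-of-the-envelope sketch in Python. It uses only the numbers quoted above (0.3 Wh per search, 2.9 Wh per request, 9 billion queries a day); the function name `annual_twh` is our own, and the sketch assumes every query costs exactly the average amount.

```python
# Per-query electricity figures quoted by the IEA, in watt-hours.
SEARCH_WH = 0.3
CHATGPT_WH = 2.9
QUERIES_PER_DAY = 9e9
DAYS_PER_YEAR = 365
WH_PER_TWH = 1e12  # 1 terawatt-hour = 10^12 watt-hours


def annual_twh(wh_per_query: float, queries_per_day: float = QUERIES_PER_DAY) -> float:
    """Yearly electricity in terawatt-hours for a given per-query cost."""
    return wh_per_query * queries_per_day * DAYS_PER_YEAR / WH_PER_TWH


search_total = annual_twh(SEARCH_WH)    # about 1 TWh
chatgpt_total = annual_twh(CHATGPT_WH)  # about 9.5 TWh
extra = chatgpt_total - search_total    # about 8.5 TWh

print(f"Search only:   {search_total:.2f} TWh/year")
print(f"ChatGPT-style: {chatgpt_total:.2f} TWh/year")
print(f"Difference:    {extra:.2f} TWh/year")
```

Whether you count ChatGPT's total draw or only the increase over regular search, the result lands in the high single digits of terawatt-hours, which is how a rounded figure of "almost 10" arises.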

Alex de Vries, a PhD candidate at VU Amsterdam and founder of the blog Digiconomist, did some number crunching on AI's energy use in 2023. He noticed that NVIDIA, the dominant player in the AI hardware market with a share of about 95%, publishes power specifications for its hardware, and that projections of its sales were available. Combining the two, he estimated that by 2027 the AI industry could be using anywhere from 85 to 134 terawatt-hours of electricity a year. That's about as much as his home country, the Netherlands, uses annually.
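An estimate of this kind boils down to multiplying a projected number of AI servers by each server's power draw and by the hours in a year. The sketch below shows that method. Its inputs are our assumptions, not figures from this article: 1.5 million NVIDIA AI servers shipped per year by 2027, running flat out at a rated 6.5 kW (DGX A100-class) to 10.2 kW (DGX H100-class). We believe these match the inputs de Vries used, but treat them as illustrative.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours
KWH_PER_TWH = 1e9          # 1 terawatt-hour = 10^9 kilowatt-hours

# Assumed inputs (not from the article): server count and rated power per server.
SERVERS_2027 = 1_500_000
LOW_KW = 6.5    # assumed DGX A100-class rating
HIGH_KW = 10.2  # assumed DGX H100-class rating


def annual_server_twh(servers: int, kw_per_server: float, utilization: float = 1.0) -> float:
    """Yearly electricity in TWh for a server fleet at a given utilization (0 to 1)."""
    kwh = servers * kw_per_server * HOURS_PER_YEAR * utilization
    return kwh / KWH_PER_TWH


low = annual_server_twh(SERVERS_2027, LOW_KW)    # about 85.4 TWh
high = annual_server_twh(SERVERS_2027, HIGH_KW)  # about 134 TWh

print(f"Estimated range: {low:.0f} to {high:.0f} TWh/year")
```

The `utilization` parameter is a hypothetical knob we added: the default of 1.0 assumes every server runs at full rated power around the clock. The sketch also leaves out cooling and other data center overhead, so it says nothing about the total facility load.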


AI requires freshwater resources

Data centers get hot because they use so much energy. A common way to cool them is by circulating water.

Shaolei Ren, an associate professor of electrical and computer engineering at UC Riverside, has been studying the water costs of computation for the past decade. By his estimate, a conversation with GPT-3 of roughly 10 to 50 responses consumes about half a liter of fresh water.
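Dividing that half-liter by the length of the conversation gives a rough per-response figure. This is simple arithmetic on the numbers above, and it assumes the water is spread evenly across responses; the function name is our own.

```python
BOTTLE_ML = 500  # half a liter, in milliliters


def ml_per_response(responses: int, total_ml: float = BOTTLE_ML) -> float:
    """Average water per response, assuming an even split across the conversation."""
    return total_ml / responses


for n in (10, 50):
    print(f"{n} responses -> {ml_per_response(n):.0f} mL each")
# prints:
# 10 responses -> 50 mL each
# 50 responses -> 10 mL each
```

So each response works out to roughly 10 to 50 milliliters of water, depending on how long the conversation runs.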

In 2022, Google’s data centers consumed about 5 billion gallons of fresh water for cooling, 20% more than in 2021. Microsoft’s water use rose by 34% over the same period.

That growth shouldn’t be surprising: Google’s data centers host the Bard chatbot and other generative AI models, and Microsoft’s servers host ChatGPT along with OpenAI’s GPT-3 and GPT-4 models.
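To put Google’s 2022 cooling figure in the same units as Ren’s half-liter, and to see what a 20% rise implies about the year before, the numbers can be worked through in Python. The 2021 value is back-calculated from the article’s rounded figures, so treat it as approximate.

```python
LITERS_PER_US_GALLON = 3.785411784  # exact by definition

gallons_2022 = 5e9  # about 5 billion gallons (rounded)
growth = 0.20       # 20% increase over 2021

liters_2022 = gallons_2022 * LITERS_PER_US_GALLON  # about 18.9 billion liters
gallons_2021 = gallons_2022 / (1 + growth)         # about 4.17 billion gallons

print(f"2022: {liters_2022 / 1e9:.1f} billion liters")
print(f"Implied 2021: {gallons_2021 / 1e9:.2f} billion gallons")
```

Note that the 2021 baseline comes from dividing by 1.2, not from subtracting 20% of the 2022 figure, since the increase is measured against the earlier year.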

So, should we ditch AI and revert to regular search? Not necessarily. The International Organization for Standardization (ISO) is developing sustainable AI standards to be rolled out by late 2024. They focus on energy efficiency, raw material use, transportation, and water consumption, along with strategies to lessen AI's environmental footprint. The goal is to empower us, the users, to make informed choices about our AI usage.

Ideally, we'll reach a point where we can choose a more energy-efficient model and understand the water and carbon impact of each request we make. Until then, if a traditional search can get the job done, it's best to stick with it.
